Kernel synchronization

Understanding Kernel Synchronization

When a resource is shared between multiple threads of execution, it must be protected from concurrent access. If two or more threads access and manipulate the same data at the same time, they may overwrite each other's changes or read the data while it is in an inconsistent state. Coordinating access to shared data so that this cannot happen is called synchronization.

In this chapter we discuss what synchronization is, why it is needed, what critical regions and race conditions are, and what a deadlock is. The next chapter covers the synchronization techniques available in the Linux kernel.

Critical Regions and Race Conditions

A piece of code that accesses shared data is called a critical region or critical section. If multiple threads execute this code at the same time, the data can become inconsistent, so the programmer must ensure that two or more threads never execute the same critical section simultaneously. When two or more threads do execute the same critical section at the same time, the result is a race condition. Synchronization is how we avoid race conditions. Let's look at an example of why this protection is needed.

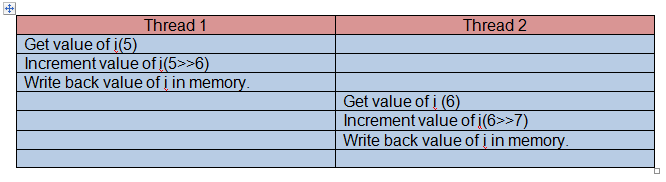

Consider a global integer variable i and a simple critical section that increments it. Assume two threads, A and B, enter the critical section at the same time and the initial value of i is 5. The expected sequence of operations is shown below.

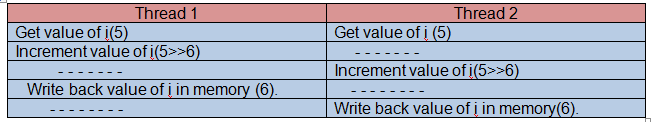

In the expected execution, i is incremented twice and ends up as 7. But because both threads are in the critical section at the same time, the following execution is also possible.

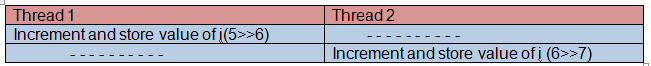
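The two interleavings can also be replayed by hand in plain C. The sketch below is only an illustration: it creates no real threads, and the names `replay_lost_update`, `replay_serialized`, `reg_a` and `reg_b` are hypothetical. Each thread is modelled as a private "register" variable so that the outcome is deterministic.

```c
/* Shared global variable, as in the example above. */
static int i = 5;

/*
 * Replay the problematic interleaving step by step.
 * reg_a and reg_b stand in for the CPU registers of threads A and B.
 */
int replay_lost_update(void)
{
    int reg_a, reg_b;

    i = 5;
    reg_a = i;          /* A reads i (5) */
    reg_b = i;          /* B reads i (5) before A has written back */
    reg_a = reg_a + 1;  /* A increments its private copy to 6 */
    reg_b = reg_b + 1;  /* B increments its private copy to 6 */
    i = reg_a;          /* A writes 6 */
    i = reg_b;          /* B writes 6, overwriting A's update */
    return i;
}

/* Replay the expected order: A finishes completely before B starts. */
int replay_serialized(void)
{
    i = 5;
    i = i + 1;          /* A: 5 -> 6 */
    i = i + 1;          /* B: 6 -> 7 */
    return i;
}
```

Calling `replay_serialized()` returns 7, while `replay_lost_update()` returns 6: one of the two increments is lost.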

As the image above shows, threads A and B modify the same variable i at the same time, and the final value of i can be 6 instead of 7. This is one of the simplest examples of a critical region, and the outcome here is fairly harmless. In the kernel, however, the same kind of race can lead to very dangerous situations that corrupt data and affect the stability and performance of the whole system. To avoid such problems, the programmer needs to prevent multiple threads from being in the critical region simultaneously. One possible solution to the problem above is to perform the read, the increment and the write back to memory as a single indivisible instruction.
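As a rough user-space sketch of that idea, the code below uses C11 `atomic_fetch_add` and POSIX threads rather than any kernel interface (inside the kernel the analogous type is `atomic_t`). The names `counter`, `worker`, `run_two_atomic_incrementers` and the constant `INCREMENTS_PER_THREAD` are assumptions made for this illustration.

```c
#include <pthread.h>
#include <stdatomic.h>

#define INCREMENTS_PER_THREAD 100000

/* Shared counter; every update goes through an atomic read-modify-write. */
static atomic_int counter;

static void *worker(void *arg)
{
    (void)arg;
    for (int n = 0; n < INCREMENTS_PER_THREAD; n++)
        atomic_fetch_add(&counter, 1);  /* read, add and write as one step */
    return NULL;
}

/*
 * Start two threads that increment the counter concurrently.
 * Returns the final value, or -1 if a thread could not be created.
 */
int run_two_atomic_incrementers(int initial)
{
    pthread_t a, b;

    atomic_store(&counter, initial);
    if (pthread_create(&a, NULL, worker, NULL) != 0)
        return -1;
    if (pthread_create(&b, NULL, worker, NULL) != 0) {
        pthread_join(a, NULL);
        return -1;
    }
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return atomic_load(&counter);
}
```

Because each increment is indivisible, no update can be lost: starting from 5, the result is always 5 plus twice `INCREMENTS_PER_THREAD`, however the two threads are scheduled.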

An atomic operation is one that the processor performs as a single indivisible step: the read, the modification and the write cannot be interleaved with another access to the same location. Most processor architectures provide atomic instructions. Atomic operations alone, however, do not solve every race condition. Let's look at a slightly more complex example.

Assume a kernel buffer that contains some important data. The buffer is filled or modified by a producer thread A, and the data is read or removed by a consumer thread B. If the producer updates the buffer while the consumer is reading it, the consumer may see inconsistent data. The atomic operations discussed above cannot prevent the race in this case, because updating the buffer takes more than one instruction. We need a different solution, one that prevents one processor from reading the buffer while another is writing it.

We need a way to make sure that only one thread can manipulate the data structure at a time. A lock is such a mechanism: it prevents access to a resource while another thread is in the critical section and using that resource.

A lock works like a lock on a door, where the room behind the door is the critical section. If a thread tries to enter the room while it is empty, it is allowed in and locks the door from the inside. It performs its task inside the room and, when finished, unlocks the door and leaves. If another thread tries to enter while the room is occupied, it must wait for the thread inside to leave and unlock the door before it can enter.
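The door analogy can be sketched as a very small busy-waiting lock built on the C11 `atomic_flag` type. This is an illustrative user-space toy, not how the kernel implements its locks; `struct door`, `DOOR_INIT`, `door_try_enter`, `door_enter` and `door_leave` are hypothetical names.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* The door: the flag is set while somebody is inside the room. */
struct door {
    atomic_flag locked;
};

#define DOOR_INIT { ATOMIC_FLAG_INIT }

/*
 * Try the door once. atomic_flag_test_and_set returns the previous value,
 * so "false" means the room was empty and we have just locked the door.
 */
bool door_try_enter(struct door *d)
{
    return !atomic_flag_test_and_set_explicit(&d->locked,
                                              memory_order_acquire);
}

/* Wait in front of the door until the room is free, then lock it. */
void door_enter(struct door *d)
{
    while (!door_try_enter(d))
        ;  /* busy-wait until the occupant leaves */
}

/* Unlock the door and leave the room. */
void door_leave(struct door *d)
{
    atomic_flag_clear_explicit(&d->locked, memory_order_release);
}
```

A thread brackets its critical section with `door_enter()` and `door_leave()`. A second `door_try_enter()` on an occupied room fails until the occupant has called `door_leave()`.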

Example

The buffer problem above can be solved with a lock. Any thread that wants to read or write the buffer must first acquire the lock. Only if the lock is granted may the thread access the buffer; otherwise it has to wait until the lock becomes available. The Linux kernel provides several locking mechanisms, which we will discuss in the next tutorial.
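A minimal user-space sketch of this scheme is shown below. It uses a POSIX mutex as a stand-in for a kernel lock, and the buffer layout is an assumption made for illustration: `struct shared_buffer` counts as consistent only when its two fields are equal. All names (`buf`, `producer`, `consumer`, `run_producer_consumer`, `ROUNDS`) are hypothetical.

```c
#include <pthread.h>

#define ROUNDS 100000

/* Hypothetical shared buffer: consistent only when first == last. */
struct shared_buffer {
    pthread_mutex_t lock;
    int first;
    int last;
};

static struct shared_buffer buf = { PTHREAD_MUTEX_INITIALIZER, 0, 0 };

static void *producer(void *arg)
{
    (void)arg;
    for (int n = 1; n <= ROUNDS; n++) {
        pthread_mutex_lock(&buf.lock);
        buf.first = n;   /* the buffer is inconsistent at this point ... */
        buf.last = n;    /* ... and consistent again here */
        pthread_mutex_unlock(&buf.lock);
    }
    return NULL;
}

static void *consumer(void *arg)
{
    long *inconsistent_reads = arg;

    for (int n = 0; n < ROUNDS; n++) {
        pthread_mutex_lock(&buf.lock);
        if (buf.first != buf.last)
            (*inconsistent_reads)++;
        pthread_mutex_unlock(&buf.lock);
    }
    return NULL;
}

/* Returns how often the consumer saw a half-updated buffer, or -1 on error. */
long run_producer_consumer(void)
{
    pthread_t a, b;
    long inconsistent_reads = 0;

    if (pthread_create(&a, NULL, producer, NULL) != 0)
        return -1;
    if (pthread_create(&b, NULL, consumer, &inconsistent_reads) != 0) {
        pthread_join(a, NULL);
        return -1;
    }
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return inconsistent_reads;
}
```

Because both threads take the same lock before touching the buffer, the consumer can never observe the state between the two writes, so the function returns 0.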

Causes of concurrency

In the Linux kernel, concurrency can arise for several reasons:

1) Kernel preemption

The kernel has been preemptive since version 2.6, which means one task can preempt another while it is running kernel code. The newly scheduled task might then execute in the same critical region.

2) Interrupts

Interrupts occur asynchronously, at almost any time, and interrupt the currently executing task.

3) Softirqs and tasklets

The kernel can raise or schedule a softirq or tasklet at almost any time, interrupting the currently executing code.

4) Symmetrical multiprocessing

Two or more processors can execute the same kernel code at the same time, which can cause race conditions.

5) Sleeping

A task in the kernel can sleep, which asks the kernel scheduler to run a new process.

Before writing code, the developer should identify all possible causes of concurrency. Once these are well understood, it is much easier for the kernel developer to avoid race conditions.
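The interrupt case can be imitated in user space with a POSIX signal, which is only a loose analogy for a hardware interrupt. In the sketch below the handler plays the role of an interrupt handler, and blocking the signal with `sigprocmask` loosely corresponds to disabling interrupts around a critical region. The names `counter`, `on_signal` and `increment_with_interruption` are hypothetical, and the "interrupt" is forced with `raise()` so that the behaviour is repeatable.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <string.h>

/* Data shared between "process context" and the signal handler. */
static volatile sig_atomic_t counter;

/* Plays the role of an interrupt handler that updates the shared data. */
static void on_signal(int signo)
{
    (void)signo;
    counter = counter + 1;
}

/*
 * Increment the counter while a signal arrives between the read and the
 * write. If block_signal is non-zero, SIGUSR1 is blocked for the duration
 * of the critical region. Returns the final counter, or -1 on setup failure.
 */
int increment_with_interruption(int block_signal)
{
    struct sigaction sa;
    sigset_t block, old;
    int tmp;

    memset(&sa, 0, sizeof(sa));
    sa.sa_handler = on_signal;
    sigemptyset(&sa.sa_mask);
    if (sigaction(SIGUSR1, &sa, NULL) != 0)
        return -1;

    sigemptyset(&block);
    sigaddset(&block, SIGUSR1);

    counter = 5;
    if (block_signal)
        sigprocmask(SIG_BLOCK, &block, &old);

    tmp = counter;       /* read */
    raise(SIGUSR1);      /* the "interrupt" fires in the middle of the update */
    counter = tmp + 1;   /* write back */

    if (block_signal)
        sigprocmask(SIG_SETMASK, &old, NULL);  /* pending signal runs now */

    return counter;
}
```

Without blocking, the handler's increment is overwritten and the function returns 6. With the signal blocked, the handler runs only after the critical region has finished, and the result is 7.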

Knowing which data needs protection

The developer has to identify which data needs protection. This is usually easy. Local variables that are private to a particular thread need no protection, but global variables that other threads can also access must be protected. Other data that needs protection includes:

1) Data shared between process context and interrupt context

2) Data shared between the currently executing task and a newly scheduled task that preempted it

3) Data accessed by code that two or more processors can execute at the same time
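The difference between thread-local and global data can be sketched in user space as follows, again with POSIX threads standing in for kernel tasks. The names `total`, `total_lock`, `count_items`, `run_counters` and `ITEMS_PER_THREAD` are assumptions made for this example.

```c
#include <pthread.h>

#define ITEMS_PER_THREAD 1000

static pthread_mutex_t total_lock = PTHREAD_MUTEX_INITIALIZER;
static long total;  /* global: visible to every thread, needs protection */

static void *count_items(void *arg)
{
    long local_sum = 0;  /* local: lives on this thread's stack, no lock */

    (void)arg;
    for (int n = 0; n < ITEMS_PER_THREAD; n++)
        local_sum += 1;

    /* Only the update of the shared global is a critical section. */
    pthread_mutex_lock(&total_lock);
    total += local_sum;
    pthread_mutex_unlock(&total_lock);
    return NULL;
}

/* Runs two counting threads; returns the shared total, or -1 on error. */
long run_counters(void)
{
    pthread_t a, b;

    total = 0;
    if (pthread_create(&a, NULL, count_items, NULL) != 0)
        return -1;
    if (pthread_create(&b, NULL, count_items, NULL) != 0) {
        pthread_join(a, NULL);
        return -1;
    }
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return total;  /* safe to read: both threads have finished */
}
```

Keeping as much work as possible in local variables and locking only around the shared update keeps the critical section short.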

Deadlocks

Deadlock is a situation in which two or more threads each wait for another to release a resource. None of them can proceed, because the resource each one is waiting for will never become available.

For example, if a thread tries to acquire a lock that it already holds, the result is a deadlock: the thread waits for the lock to be released, but it will never release the lock because it is busy waiting to acquire it.
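This self-deadlock can be observed safely in user space with a POSIX error-checking mutex, which reports the problem instead of hanging. The sketch assumes the `PTHREAD_MUTEX_ERRORCHECK` mutex type and is not a kernel API. With a plain non-recursive lock the second acquisition would typically never return. The function name `lock_twice` is hypothetical.

```c
#define _XOPEN_SOURCE 700
#include <errno.h>
#include <pthread.h>

/*
 * Acquire the same lock twice from one thread.
 * Returns the result of the second pthread_mutex_lock() call.
 */
int lock_twice(void)
{
    pthread_mutexattr_t attr;
    pthread_mutex_t lock;
    int second;

    pthread_mutexattr_init(&attr);
    pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_ERRORCHECK);
    pthread_mutex_init(&lock, &attr);
    pthread_mutexattr_destroy(&attr);

    pthread_mutex_lock(&lock);           /* first acquisition succeeds */
    second = pthread_mutex_lock(&lock);  /* same thread, same lock */

    pthread_mutex_unlock(&lock);         /* release the one lock we hold */
    pthread_mutex_destroy(&lock);
    return second;
}
```

With the error-checking type, the second call fails with `EDEADLK` rather than blocking forever, which makes the mistake visible during testing.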

There are various techniques for avoiding deadlock. We will discuss deadlocks and the methods of avoiding them in another tutorial.